
Widescreen Monitors & Stuff

- Thread starter Sarge


OK, well, it depends on what kind of monitors you have, assuming your video card has one VGA and one DVI output. If they're both DVI, you'll need a VGA-to-DVI converter. If they're both VGA, you'll need a DVI-to-VGA adapter (one should have come with your video card). If they're a VGA and a DVI, then you're all set.
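The matching logic above can be sketched in a few lines of Python. This is my own toy, not anything official: it assumes the card has one VGA and one DVI output (which is what "a VGA and a DVI, then you're all set" implies) and simply tries every way of plugging the two monitors in, picking the one that needs the fewest adapters. It does not check signal compatibility: a passive VGA-to-DVI adapter only works if the monitor's DVI input accepts analog (DVI-I/DVI-A), and a DVI-to-VGA adapter needs a DVI-I port on the card.

```python
# Toy adapter planner for a two-monitor setup.
# Assumption: card_outputs lists the card's ports, e.g. ("VGA", "DVI").
# Ignores whether a passive adapter will actually carry a usable signal.
from itertools import permutations


def adapters_needed(card_outputs, monitor_inputs):
    """Return the shortest list of adapters needed to connect each monitor
    to one card output, trying every monitor-to-output pairing."""
    best = None
    for order in permutations(monitor_inputs):
        # An adapter is needed wherever the port types don't match.
        needed = [f"{out}-to-{mon} adapter"
                  for out, mon in zip(card_outputs, order) if out != mon]
        if best is None or len(needed) < len(best):
            best = needed
    return best


if __name__ == "__main__":
    card = ("VGA", "DVI")
    for monitors in [("DVI", "DVI"), ("VGA", "VGA"), ("VGA", "DVI")]:
        print(monitors, "->", adapters_needed(card, monitors) or "all set")
```

With two DVI monitors it asks for one VGA-to-DVI adapter, with two VGA monitors one DVI-to-VGA adapter, and with one of each it needs nothing.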

Sarge

Distinguished Member

Haha, I know what you mean. I have an AMD Athlon 64 3000+ now, BUT nothing stops me from installing an x86 OS, like now... I had Ubuntu x64 and it was REAL HELL finding applications; in the end I gave up and installed x86 Ubuntu, and everything is all right. So I can install any x86 OS I want, no problems.

I shamelessly stole this off the web....LOL

VGA: http://en.wikipedia.org/wiki/VGA

DVI: http://en.wikipedia.org/wiki/Dvi

The main difference is that VGA is an analog standard for computer monitors that was first marketed in 1987, whereas DVI is a newer and superior digital technology that has the potential to provide a much better picture.

Here are two relevant excerpts from Wikipedia for your convenience:

"Existing standards, such as VGA, are analog and designed for CRT based devices. As the source transmits each horizontal line of the image, it varies its output voltage to represent the desired brightness. In a CRT device, this is used to vary the intensity of the scanning beam as it moves across the screen. However, in digital displays, instead of a scanning beam there is an array of pixels and a single brightness value must be chosen for each. The decoder does this by sampling the voltage of the input signal at regular intervals. When the source is also a digital device (such as a computer), this can lead to distortion if the samples are not taken at the centre of each pixel, and in general the crosstalk between adjacent pixels is high."

SOURCE: http://en.wikipedia.org/wiki/Dvi

"DVI takes a different approach. The desired brightness of the pixels is transmitted as a list of binary numbers. When the display is driven at its native resolution, all it has to do is read each number and apply that brightness to the appropriate pixel. In this way, each pixel in the output buffer of the source device corresponds directly to one pixel in the display device, whereas with an analog signal the appearance of each pixel may be affected by its adjacent pixels as well as by electrical noise and other forms of analog distortion."

SOURCE: http://en.wikipedia.org/wiki/Dvi
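To make the sampling argument in those quotes a bit more concrete, here's a toy Python simulation (my own sketch, not from Wikipedia). It uses a deliberately crude model: the analog cable is treated as a first-order low-pass filter with a made-up smoothing factor `alpha`, each pixel lasts `oversample` time steps on the wire, and the receiver takes one sample per pixel at a chosen `phase` (0.5 = pixel centre). The DVI-style path just copies each number across.

```python
# Toy model of the VGA vs DVI difference described in the quotes.
# Assumptions (not from the article): the cable acts like a first-order
# low-pass filter with smoothing factor `alpha`, and each pixel occupies
# `oversample` time steps of the analog waveform.

def analog_transmit(pixels, oversample=8, alpha=0.35):
    """Turn pixel values into a smoothed 'voltage' waveform (VGA-style)."""
    waveform = []
    level = 0.0  # the line starts at black
    for p in pixels:
        for _ in range(oversample):
            # The level only moves part of the way toward the target each
            # step, so each pixel is smeared by the one before it (crosstalk).
            level += alpha * (p - level)
            waveform.append(level)
    return waveform


def analog_receive(waveform, n_pixels, oversample=8, phase=0.5):
    """Sample the waveform once per pixel; phase 0.5 is the pixel centre."""
    out = []
    for i in range(n_pixels):
        idx = min(int((i + phase) * oversample), len(waveform) - 1)
        out.append(round(waveform[idx]))
    return out


def digital_link(pixels):
    """DVI-style: each pixel is sent as a number and applied unchanged."""
    return list(pixels)


if __name__ == "__main__":
    pixels = [0, 255, 0, 255, 0, 0, 255, 255]  # black/white test row
    wave = analog_transmit(pixels)
    print("DVI:          ", digital_link(pixels))
    print("VGA, centred: ", analog_receive(wave, len(pixels), phase=0.5))
    print("VGA, early:   ", analog_receive(wave, len(pixels), phase=0.05))
```

In this model the digital path reproduces the row exactly, the centred analog samples come out close but not exact, and sampling early in each pixel makes the error noticeably worse. That's the reason LCD monitors with a VGA input usually have clock/phase settings and an "auto adjust" button.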


The pic on the left is the VGA connector. It is slightly smaller than the DVI one, and most monitors, whether CRT or LCD, will work with it, sometimes with an adapter.

The difference is that the DVI one is digital and therefore gives a cleaner signal and picture when used with a monitor that has a digital input.

I have an LCD monitor, but it only has an analog (VGA) input; great picture though.

The monitor I said I would like to get has two separate connectors, one for VGA and one for DVI, so it supports both standards.

I'm sure one would have to be an absolute connoisseur to know the difference just by looking at a screen.


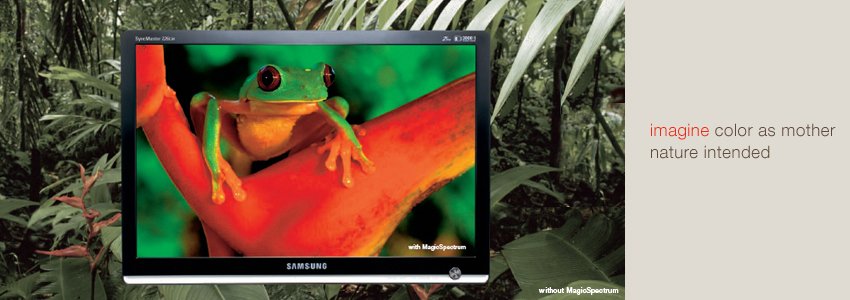

Samsung have just announced their latest and most expensive monitors...with "Magic Spectrum".

More info at http://magicspectrum.com


Sarge

Distinguished Member

Four days ago I was doing stuff as always... and at one moment I saw the device that shows you the incoming voltage and keeps it normal if it's too low or too high (I don't know what it's called, but it's not a UPS). Anyway... that thing started going "click click beep click", something like that, so I took a look and saw 270 volts (220 V is normal power). My monitor started acting strange, so I ran to turn it off... the moment I pressed the button on the monitor I heard a loud BAM!, like something exploded inside... and I quickly turned off the computer with the reset button and the one above it. That was the end of my Samsung SyncMaster 753s 17"...

I was offline for a few days (dying without internet) and I was even pretty nervous; I can't live without internet... maybe I'm addicted to it. Anyway... I took the monitor to a repair shop, and they tried everything to make it work, but it was dead. Totally. They couldn't fix it even after trying for 2 days, and then suggested I drop it and get a new one.

So now I'm using an old 15" COMPAQ that works at resolutions higher than 1024x768 at 75 Hz :S I was like OMG, this thing is small but strong... I have to live with it for a month or 2 max, until I get a new Samsung 19" widescreen TFT. Not sure what the model is; I just saw it and said... "that one, please".


OMG... what a mess! Your electricity is 230 V anyway, isn't it? In North America nearly everything is 110 V, except for the kitchen oven, the laundry dryer and my air conditioners, which are 220 V.

You should make sure that you have surge protectors on all your "delicate" electronics. All my computer stuff is plugged into one and my TV/sound system into another one.

Power surges shouldn't happen, but they can cause a lot of damage when they do.
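For a sense of how far off that 270 V reading was, here's a small Python sketch that expresses a reading as a percentage deviation from nominal. The ±10% band is my assumption (a typical figure quoted for 230 V European mains); check your own grid's specification before relying on it.

```python
# Rough check of a mains reading against a nominal voltage.
# Assumption: +/-10% is treated as the acceptable band; that is typical for
# 230 V European mains but is not a universal rule.

def deviation_percent(measured, nominal):
    """How far `measured` is from `nominal`, as a percentage of nominal."""
    return (measured - nominal) / nominal * 100


def within_tolerance(measured, nominal, tolerance_percent=10.0):
    """True if the reading lies inside nominal +/- tolerance_percent."""
    return abs(deviation_percent(measured, nominal)) <= tolerance_percent


if __name__ == "__main__":
    for nominal in (220, 230):
        dev = deviation_percent(270, nominal)
        status = "OK" if within_tolerance(270, nominal) else "out of range"
        print(f"270 V vs {nominal} V nominal: {dev:+.1f}% ({status})")
```

Either way you count it, 270 V is roughly 17% (vs 230 V) to 23% (vs 220 V) too high, well outside that band.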

I'm so sorry to hear that. A Samsung SyncMaster was my first monitor, before the one I have now.
