How do you define an OS as stable?

XP was stable in that you could do an honest day’s work without having to save it every couple of seconds in fear of a BSOD. Windows 2000 was stable: it gave the users what they expected, and once they got it working (driver issues mostly), it remained stable.

One can’t honestly call Vista stable in that way, however. It’s largely hit-or-miss: Vista either works or it doesn’t. Once it works, it might just stop working; you never know. But Vista is stable – very stable.

In Windows XP (x86), if the system went above 26-27 thousand open memory handles at once, it would just fail. The entire operating system bogged down, and even after you got the handle count back down to a more manageable level, it remained slow and unresponsive until a reboot was performed. Windows XP x64 used 64-bit technology to raise the bar to an amazing 35-38 thousand handles, going by our experience.

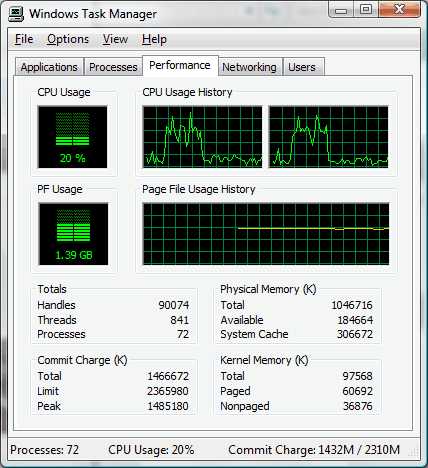

But when we saw this on Vista, we were amazed. Doing nothing out of the ordinary, only running Photoshop CS2 and installing Visual Studio on a decent machine with a gigabyte of memory (upgraded to two GB since, but one gigabyte should suffice and then some), what we saw was simply amazing. About 80% of the memory was in use, which wasn’t unusual. There was noticeable lag in the interface, and unlocking the screen took a while, so we tried to see what was happening. That’s when we looked at the open memory handles, and we were shocked.

Ninety thousand open memory handles. Something a 64-bit Itanium system running Windows Server 2003 can’t handle easily. About two and a half times the x64 limit on XP… All ninety thousand handles on a single 1GB x86 machine with Windows Vista installed.

The amazing thing is, we barely felt it. The system was usable. And as soon as we closed all the processes down, the handle count instantly went back to twenty-six thousand, a more manageable size, and the impact was gone. Unlike XP, a restart wasn’t necessary.

But that’s scary. It’s wonderful to be at 90k handles and not even have to bat an eye. Nevertheless, seeing that shutting down explorer.exe and a couple of generic svchost.exe processes sent the count back down means there is something seriously wrong in the kernel and low-level processes, something that is thankfully countered by the amazing memory-management features of Windows Vista on an x86 machine.
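For anyone who wants to check which processes are holding the handles on their own machine, here is a rough Python sketch. It is not how we took the measurements above. It assumes the third-party `psutil` package is installed, and the helper names `collect_handle_counts` and `summarize`, as well as the per-process figures in `sample`, are made up purely for illustration.

```python
from collections import Counter


def collect_handle_counts():
    """Return {process name: open handle count} for the live system.

    Assumes the third-party psutil package is installed. num_handles()
    exists only on Windows; elsewhere we fall back to num_fds().
    """
    import psutil  # imported lazily so summarize() works without it

    counts = Counter()
    for proc in psutil.process_iter(["name"]):
        try:
            if hasattr(proc, "num_handles"):
                n = proc.num_handles()
            else:
                n = proc.num_fds()
            counts[proc.info["name"] or "?"] += n
        except psutil.Error:
            continue  # process exited or access was denied
    return dict(counts)


def summarize(counts, top=3):
    """Return (system-wide total, the `top` biggest consumers)."""
    total = sum(counts.values())
    biggest = sorted(counts.items(), key=lambda kv: kv[1], reverse=True)[:top]
    return total, biggest


# Invented per-process figures, NOT measurements from our machine
sample = {
    "explorer.exe": 41000,
    "svchost.exe": 23000,
    "photoshop.exe": 9000,
    "other": 17000,
}
total, biggest = summarize(sample, top=2)
print(total, biggest)
```

On a real system you would call `summarize(collect_handle_counts())` before and after closing the suspect processes and compare the two totals.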


You’re right.

Pardon?

Windows Vista’s GDI limits have been improved as well, allowing for the higher handle count.

Windows XP can and will handle it if you do some modifications, as will Windows Server 2003 with Service Pack 1.

I like what you said about Windows 2000 – I had an older IBM ThinkPad running Windows 2000 for about 2 1/2 years without any problems or crashes, and that laptop even got dropped down a flight of stairs while it was on. Windows XP can prove to be more reliable than Windows 2000 in certain environments, however.

Hello Kris 😀

You say XP can manage 90k handles with heavy tweaking – do you have any idea how many Linux or Mac (BSD) can handle?

I’m just wondering if it’s an architectural inevitability of the x86 processors, or a software-engineering error.

I recall installing Visual Studio 2005 and the handle count went to about 46K on my P4 system with 768MB DDR RAM. It went back to about 8K after installation, and I was able to use my system without any speed bumps or reboots. I don’t know where you got that limit of 30K handles on a Windows XP machine. I haven’t ever performed any performance tweaks on that system.

Hello Tanveer.

I’m not sure that’s possible: Windows XP boots at 10-15k handles, and running any programs gets it quite a bit higher. 8k is quite low (like Windows 2000!)